VeriSight

Downloadable software for Android

Inspiration

For people with schizophrenia, the only ways to know whether they are hallucinating are to stay skeptical of what they are seeing or to have an external source tell them.

A common aid for patients with schizophrenia is a service dog. A service dog can be trained to "greet" someone the patient suspects is a hallucination. Another trick is to open the phone's camera and point it at whatever the patient thinks might be a hallucination. However, neither solution is truly non-intrusive enough for a patient to use every day.

What It Does

VeriSight combines these two external checks into a single app that runs in mixed reality.

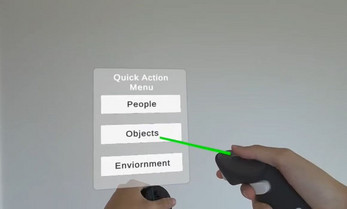

VeriSight has two features for validating your environment. The first detects people: with the click of a button, the app senses your surroundings and projects a blue outline over every person it detects. If someone you see has no outline, they may not actually be there.

The second is an AI agent that describes the environment around you. Hold down the trigger, ask any question about what you're looking at, and get a response within seconds. While you talk, a real-time audio visualizer confirms that your voice is being picked up.

I've also implemented a quick action menu with three buttons: 'People', 'Objects', and 'Environment'. Clicking one of them asks the AI agent to describe that subject. For example, clicking 'People' has the AI tell you how many people are in your environment and what they are doing. What if there is no one there? No worries! The AI will tell you that no one is in your environment. The quick action menu is designed to make VeriSight even less intrusive: if the user doesn't want to talk to the AI because other people are around, they can simply use the menu to ask about their surroundings.

How VeriSight is Built

VeriSight uses the Quest Passthrough Camera API in Unity, Unity Sentis (Unity's runtime inference engine for neural-network models), and the Gemini Live API.

The model running in Sentis handles real-time object recognition, triggered by pressing the 'A' button on the right controller. It is trained on over 80 object types, which we found sufficient for patients' everyday needs.
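
The write-up does not describe how raw detections become the blue person outlines. Below is a minimal sketch, assuming a detector that outputs labeled, scored bounding boxes (the model, the `Detection` record, and both thresholds are hypothetical). It keeps confident "person" boxes and removes duplicates with standard greedy non-maximum suppression, a technique swapped in here for illustration rather than confirmed as VeriSight's method.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    # Hypothetical record: the real Sentis output layout depends on the model
    label: str
    confidence: float
    box: tuple  # (x_min, y_min, x_max, y_max) in image pixels


def iou(a, b):
    """Intersection-over-union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def people_to_outline(detections, min_confidence=0.5, iou_threshold=0.5):
    """Keep confident 'person' boxes and drop overlapping duplicates (greedy NMS)."""
    people = sorted(
        (d for d in detections if d.label == "person" and d.confidence >= min_confidence),
        key=lambda d: d.confidence,
        reverse=True,
    )
    kept = []
    for d in people:
        # Skip a box that overlaps too much with a higher-confidence box already kept
        if all(iou(d.box, k.box) < iou_threshold for k in kept):
            kept.append(d)
    return kept
```

An empty result means no person was detected, which corresponds to the "no outline" case the app surfaces to the user.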

To integrate Google Gemini, we had to capture both the passthrough camera images and the voice recordings from the Quest headset. Since Unity offered no straightforward WebSocket connection to Gemini, we built our own Python server that holds the Gemini API key and relays the data instead. The server passes both the prompt and the photo to the model as inputs. Gemini's speech-to-speech model generates a spoken response, which is sent back to the Quest and played through the headset. The result feels very similar to Meta Ray-Bans: you can look anywhere in your environment and ask any question about it.
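
The message format between the Quest app and the Python relay server is not described. Below is a minimal sketch, assuming a hypothetical JSON envelope that carries one JPEG frame plus the recorded voice as base64-encoded 16-bit little-endian PCM (the field names and the 16 kHz default are assumptions). The actual forwarding call to Gemini is deliberately omitted, since it depends on the SDK version and needs an API key.

```python
import base64
import json
import struct


def float_to_pcm16(samples):
    """Convert float samples in [-1, 1] to little-endian 16-bit PCM bytes.

    Out-of-range values are clipped; scaling is symmetric, so -1.0 maps to -32767.
    """
    ints = [int(round(max(-1.0, min(1.0, s)) * 32767)) for s in samples]
    return struct.pack(f"<{len(ints)}h", *ints)


def build_query_message(jpeg_bytes, pcm_bytes, sample_rate=16000):
    """Hypothetical envelope the headset sends to the relay server."""
    return json.dumps({
        "type": "query",
        "sample_rate": sample_rate,
        "image_jpeg": base64.b64encode(jpeg_bytes).decode("ascii"),
        "audio_pcm16": base64.b64encode(pcm_bytes).decode("ascii"),
    })


def parse_query_message(raw):
    """Server side: decode the envelope back into (image, audio, sample_rate)."""
    msg = json.loads(raw)
    return (
        base64.b64decode(msg["image_jpeg"]),
        base64.b64decode(msg["audio_pcm16"]),
        msg["sample_rate"],
    )
```

Keeping the API key on the server side, as the write-up describes, also means it never has to ship inside the APK.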

For the audio visualizer, pressing and holding the trigger on the VR controller starts recording your voice through the Quest's built-in microphone. The incoming audio is routed to a Unity AudioSource that plays it back almost silently (the volume is near zero, just enough to extract data from it).

Every frame, the visualizer analyzes the microphone stream with a Fast Fourier Transform (FFT). An FFT transforms audio from its time-domain waveform (how loud it is over time) into a frequency spectrum (which pitches or tones are present at a given moment). In Unity, AudioSource.GetSpectrumData(...) performs this FFT internally: it takes a chunk of audio (e.g., 64 samples), applies the transform, and returns an array of amplitude values, one per frequency band. The script reads this array every frame and uses each band's energy to scale the height of a corresponding cube, so the louder a frequency is, the taller its cube becomes. Because the raw values are very small, we amplify them visually (e.g., multiplying them by 10,000) and clamp the height. This creates a satisfying, pulsing effect that mirrors your speech patterns and the texture of your voice.
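
The spectrum-to-bars pipeline above can be sketched outside Unity as follows. This is a minimal illustration, not the project's C# script: it uses a textbook recursive radix-2 FFT with a Hann window in place of GetSpectrumData, whose exact normalization and band layout may differ. The 10,000 gain follows the text; `min_height` and `max_height` are assumed values.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    # Twiddle factors combine the two half-size transforms
    twiddled = [cmath.exp(-2j * math.pi * k / n) * odd[k] for k in range(n // 2)]
    return ([even[k] + twiddled[k] for k in range(n // 2)]
            + [even[k] - twiddled[k] for k in range(n // 2)])


def band_magnitudes(samples):
    """Hann-window the samples, run the FFT, and return N/2 band magnitudes."""
    n = len(samples)
    if n < 2 or n & (n - 1):
        raise ValueError("sample count must be a power of two >= 2")
    # Periodic Hann window reduces leakage between neighbouring bands
    # (GetSpectrumData likewise takes an FFTWindow argument)
    windowed = [s * 0.5 * (1 - math.cos(2 * math.pi * i / n)) for i, s in enumerate(samples)]
    return [abs(c) / n for c in fft(windowed)[: n // 2]]


def bar_heights(magnitudes, gain=10_000.0, min_height=0.05, max_height=5.0):
    """Scale each band's magnitude into a cube height, clamped to a visible range."""
    return [min(max_height, max(min_height, m * gain)) for m in magnitudes]
```

Feeding a 64-sample chunk of a pure tone into `band_magnitudes` puts the largest value on the tone's band, so that band's cube is the tallest. Clamping stops loud speech from producing cubes that fill the whole view.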

Development Challenges

Since everything we used was newly released, there was barely any documentation, and we had to figure things out on our own (from integrating the Gemini Live API to using Unity Sentis). The quick action menu was also very difficult to implement. The idea was that when the user presses one of the three buttons, the Quest sends the image data and the prompt directly to the Gemini API. However, Gemini kept answering the prompt based on the image from the voice feature. After debugging, I found that the Gemini Live API does not give text input priority; instead, it decides on its own which data to process first. To work around this, I converted the text prompt into speech with OpenAI's text-to-speech API and sent that audio to Gemini, which finally got the quick action menu working.
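
The workaround above can be sketched as a small pipeline. This is a hypothetical illustration: the prompt wording, function names, and injected callables are assumptions, and the real OpenAI text-to-speech and Gemini calls are passed in as plain functions rather than reproduced here. The point it shows is that each button press turns into a spoken query sent alongside the current frame, so Gemini receives the prompt as audio, the same way it receives a voice question.

```python
from typing import Callable

# Hypothetical prompt wording; the write-up does not give the exact prompts
QUICK_ACTION_PROMPTS = {
    "People": "How many people are in front of me and what are they doing? "
              "If there is no one, say so.",
    "Objects": "What are the main objects in front of me?",
    "Environment": "Briefly describe the environment around me.",
}


def run_quick_action(
    button: str,
    frame_jpeg: bytes,
    text_to_speech: Callable[[str], bytes],
    send_to_gemini: Callable[[bytes, bytes], None],
) -> None:
    """Turn a quick-action press into an audio query plus the current frame."""
    prompt = QUICK_ACTION_PROMPTS[button]  # raises KeyError for an unknown button
    audio = text_to_speech(prompt)  # stand-in for the OpenAI text-to-speech call
    send_to_gemini(frame_jpeg, audio)  # frame and spoken prompt sent together
```

Injecting the two network calls keeps the button logic testable on its own, for example with a fake text-to-speech function that just encodes the prompt.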

Check Out Our Design Doc:

https://www.figma.com/design/yeGMYmhRm2KU2nPWI9q3aZ/IDSN-Veri-Sight?node-id=0-1&...

| Field | Value |
|---|---|
| Status | Released |
| Platforms | Android |
| Author | dannyhym |
| Tags | computer-vision, mixed-reality |

Download

Install instructions

Run the APK build on your Quest headset.
